IMHO: Why #claudecode has quality shifts. It's

* not prompting

* not context

* not the problem type

It's not you. Or your project.

It's #Anthropic. They serve quantized models during the daytime to handle the load, and they don't make that transparent to users. No inference provider does. It's simply cheaper for the provider.

Quantized models are smaller, down-scaled variants that store their weights at lower numeric precision. That makes them cheaper to run, but also less capable and therefore less useful. Customers can't tell, yet they pay the same.
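To make "less precision" concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. The weight values are made up for illustration, and real inference stacks use more elaborate schemes (per-channel scales, 4-bit formats, and so on), so treat this as a toy model of the idea rather than how any provider actually does it.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    # One shared scale for all weights; fall back to 1.0 if every weight is zero.
    scale = max(abs(w) for w in weights) / 127 or 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in q_weights]


# Hypothetical weights, chosen only to show the effect.
weights = [0.8123, -0.0047, 0.3310, -0.9999, 0.0002]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("restored: ", [round(r, 4) for r in restored])
print(f"max round-trip error: {max_error:.5f}")
```

Each weight now fits in one byte instead of two or four, which is where the cost saving comes from. The price is that small weights collapse toward zero and every value picks up a rounding error of up to half the scale.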

Given that even a 200 USD subscription results in down-scaled LLM service quality, you need to consider:

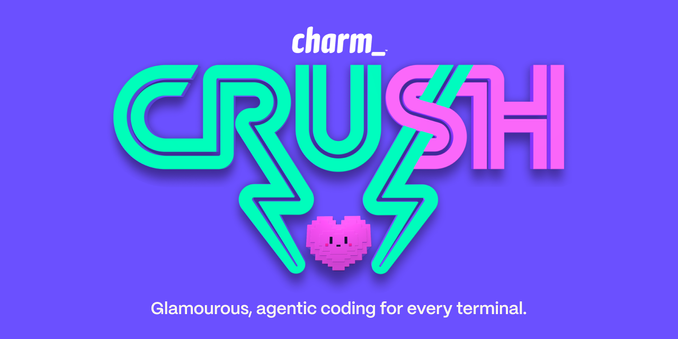

a) self-hosting

b) using open-source clients.

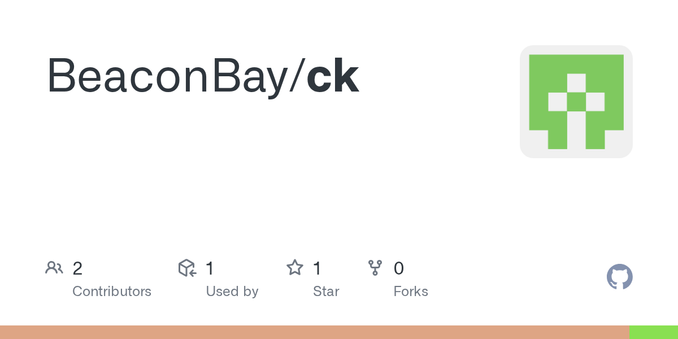

Option 1:

https://github.com/charmbracelet/crush

* OpenRouter models: Gemini, Grok

* self-hosted models: Qwen3-Coder, Kimi K2

Self-hosting option with Modal:

https://modal.com/docs/examples/vllm_inference
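Both routes speak the same OpenAI-compatible chat API, which is why switching between them is cheap. A rough sketch using only the Python standard library follows. The model IDs, the self-hosted URL, and the `VLLM_API_KEY` variable are placeholders I made up; substitute whatever your OpenRouter account or your own vLLM deployment actually exposes.

```python
import json
import os
import urllib.request


def build_chat_request(base_url, model, prompt, api_key):
    """Build a POST request for an OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Hosted route: OpenRouter. The model ID below is a placeholder.
hosted = build_chat_request(
    "https://openrouter.ai/api/v1",
    "some-vendor/some-model",
    "Explain this stack trace.",
    os.environ.get("OPENROUTER_API_KEY", ""),
)

# Self-hosted route: a vLLM server, e.g. deployed on Modal.
# The URL and model name are hypothetical.
self_hosted = build_chat_request(
    "https://my-vllm-deployment.example/v1",
    "my-self-hosted-model",
    "Explain this stack trace.",
    os.environ.get("VLLM_API_KEY", ""),
)

# Uncomment once real credentials and endpoints are in place:
# print(ask(hosted))
# print(ask(self_hosted))
```

Only the base URL, model name, and key differ between the two requests, so the client code stays the same whichever route you pick.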

Option 2:

https://github.com/openai/codex

For me, #codex is slow.

Option 3:

https://github.com/musistudio/claude-code-router

Hacks like CCR (Claude Code Router) combined with the aforementioned models are short-term workarounds at best, not mid-term solutions.

Option 4:

A #gemini-cli fork:

https://github.com/acoliver/llxprt-code

I have started using this with different models, depending on the task.

What do Options 1 to 4 have in common? You cannot rely on the providers.